Business process management (BPM) is the discipline in which people use various methods to discover, model, analyze, measure, improve, optimize, and automate business processes. Any combination of methods used to manage a company’s business processes is BPM. Processes can be structured and repeatable or unstructured and variable. Though not required, enabling technologies are often used with BPM.

BPM can be differentiated from program management, which is concerned with managing a group of interdependent projects. From another viewpoint, process management includes program management. In project management, process management is the use of a repeatable process to improve the outcome of the project.

The key distinctions between process management and project management are repeatability and predictability. If the structure and sequence of work is unique, then it is a project. In business process management, a sequence of work can vary from instance to instance: there are gateways, conditions, business rules, and so on. The key is predictability: no matter how many forks in the road, we know all of them in advance, and we understand the conditions for the process to take one route or another. If this condition is met, we are dealing with a process.
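The idea that every fork is known in advance can be illustrated with a minimal sketch. The `Order` type, the step names, and the 1000 threshold are hypothetical, chosen only for illustration; the point is that two instances may take different routes through the same fixed model.

```python
from dataclasses import dataclass


@dataclass
class Order:
    amount: float
    in_stock: bool


def route(order: Order) -> list[str]:
    """Return the path one process instance takes through a fixed model."""
    path = ["receive order"]
    # Gateway 1: the branch exists in the model before any instance runs.
    if not order.in_stock:
        path.append("back-order item")
    # Gateway 2: a (hypothetical) business rule decides whether approval is needed.
    if order.amount > 1000:
        path.append("manager approval")
    path.append("ship order")
    return path


small = route(Order(amount=50, in_stock=True))
large = route(Order(amount=5000, in_stock=False))
```

Both instances follow the same model; only the conditions evaluated at each gateway differ.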

As an approach, BPM sees processes as important assets of an organization that must be understood, managed, and developed to announce and deliver value-added products and services to clients or customers. This approach closely resembles other total quality management or continual improvement process methodologies.

ISO 9000 promotes the process approach to managing an organization.

…promotes the adoption of a process approach when developing, implementing and improving the effectiveness of a quality management system, to enhance customer satisfaction by meeting customer requirements.

BPM proponents also claim that this approach can be supported, or enabled, through technology. As such, many BPM articles and scholars frequently discuss BPM from one of two viewpoints: people and/or technology.

BPM streamlines business processing by automating workflows, while robotic process automation (RPA) automates tasks by recording a set of repetitive activities performed by humans. Organizations can combine both technologies to extend their business automation and achieve better results.

Definitions

The Workflow Management Coalition, BPM.com and several other sources use the following definition: Business process management (BPM) is a discipline involving any combination of modeling, automation, execution, control, measurement and optimization of business activity flows, in support of enterprise goals, spanning systems, employees, customers and partners within and beyond the enterprise boundaries.

The Association of Business Process Management Professionals defines BPM as: Business process management (BPM) is a disciplined approach to identify, design, execute, document, measure, monitor, and control both automated and non-automated business processes to achieve consistent, targeted results aligned with an organization’s strategic goals. BPM involves the deliberate, collaborative and increasingly technology-aided definition, improvement, innovation, and management of end-to-end business processes that drive business results, create value, and enable an organization to meet its business objectives with more agility. BPM enables an enterprise to align its business processes to its business strategy, leading to effective overall company performance through improvements of specific work activities either within a specific department, across the enterprise, or between organizations.

Gartner defines business process management as: “the discipline of managing processes (rather than tasks) as the means for improving business performance outcomes and operational agility. Processes span organizational boundaries, linking together people, information flows, systems, and other assets to create and deliver value to customers and constituents.”

It is common to confuse BPM with a BPM suite (BPMS). BPM is a professional discipline done by people, whereas a BPMS is a technological suite of tools designed to help the BPM professionals accomplish their goals. BPM should also not be confused with an application or solution developed to support a particular process. Suites and solutions represent ways of automating business processes, but automation is only one aspect of BPM.

Changes

The concept of business process may be as traditional as concepts of tasks, department, production, and outputs, arising from job shop scheduling problems in the early 20th century. The management and improvement approach as of 2010, with formal definitions and technical modeling, has been around since the early 1990s (see business process modeling). Note that the term “business process” is sometimes used by IT practitioners as synonymous with the management of middleware processes or with integrating application software tasks.

Although BPM initially focused on the automation of business processes with the use of information technology, it has since been extended to integrate human-driven processes in which human interaction takes place in series or parallel with the use of technology. For example, workflow management systems can assign individual steps that require human intuition or judgment to the relevant people, and other tasks in a workflow to a relevant automated system.

More recent variations such as “human interaction management” are concerned with the interaction between human workers performing a task.

As of 2010, technology has allowed the coupling of BPM with other methodologies, such as Six Sigma. Some BPM tools such as SIPOCs, process flows, RACIs, CTQs and histograms allow users to:

- visualize – functions and processes

- measure – determine the appropriate measure to determine success

- analyze – compare the various simulations to determine an optimal improvement

- improve – select and implement the improvement

- control – deploy this implementation and by use of user-defined dashboards monitor the improvement in real time and feed the performance information back into the simulation model in preparation for the next improvement iteration

- re-engineer – revamp the processes from scratch for better results

This brings with it the benefit of being able to simulate changes to business processes based on real-world data (not just on assumed knowledge). Also, the coupling of BPM to industry methodologies allows users to continually streamline and optimize the process to ensure that it is tuned to its market need.

As of 2012, research on BPM has paid increasing attention to the compliance of business processes. Although a key aspect of business processes is flexibility, as business processes continuously need to adapt to changes in the environment, compliance with business strategy, policies, and government regulations should also be ensured. The compliance aspect in BPM is highly important for governmental organizations. As of 2010, BPM approaches in a governmental context largely focus on operational processes and knowledge representation. There have been many technical studies on operational business processes in the public and private sectors, but researchers rarely take legal compliance activities into account, for instance the legal implementation processes in public-administration bodies.

Life-cycle

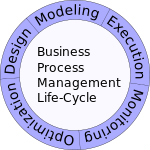

Business process management activities can be arbitrarily grouped into categories such as design, modeling, execution, monitoring, and optimization.

Design

Process design encompasses both the identification of existing processes and the design of “to-be” processes. Areas of focus include representation of the process flow, the factors within it, alerts and notifications, escalations, standard operating procedures, service level agreements, and task hand-over mechanisms. Whether or not existing processes are considered, the aim of this step is to ensure a correct and efficient new design.

The proposed improvement could be in human-to-human, human-to-system or system-to-system workflows, and might target regulatory, market, or competitive challenges faced by the businesses. Existing processes and design of a new process for various applications must synchronize and not cause a major outage or process interruption.

Modeling

Modeling takes the theoretical design and introduces combinations of variables (e.g., changes in rent or materials costs) that determine how the process might operate under different circumstances.

It may also involve running “what-if analysis” (conditions: when, if, else) on the processes: “What if I have 75% of resources to do the same task?” “What if I want to do the same job for 80% of the current cost?”
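A what-if question such as the 75% resource scenario can be sketched with a deliberately simple model. The function name, the workload of 960 hours, and the team of 10 are assumptions made for illustration, and the model assumes work divides evenly among workers, which real processes rarely do.

```python
def completion_days(work_hours: float, workers: float, hours_per_day: float = 8.0) -> float:
    """Days needed if the work divides evenly across the available workers."""
    return work_hours / (workers * hours_per_day)


# Baseline scenario: a hypothetical task of 960 work hours and 10 workers.
baseline = completion_days(work_hours=960, workers=10)

# What if only 75% of the resources are available for the same task?
reduced = completion_days(work_hours=960, workers=10 * 0.75)

# Relative slowdown caused by the reduced staffing.
slowdown = reduced / baseline
```

Under these assumptions the task takes one third longer; a fuller model would add queueing, hand-over delays, and cost variables.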

Execution

Business process execution is broadly about enacting a discovered and modeled business process. Enacting a business process is done manually or automatically or with a combination of manual and automated business tasks. Manual business processes are human-driven. Automated business processes are software-driven. Business process automation encompasses methods and software deployed for automating business processes.

Business process automation is performed and orchestrated at the business process layer or the consumer presentation layer of the SOA Reference Architecture. BPM software suites such as BPMS or iBPMS or low-code platforms are positioned at the business process layer. The emerging robotic process automation software, by contrast, performs business process automation at the presentation layer and is therefore considered non-invasive to, and decoupled from, existing application systems.

One of the ways to automate processes is to develop or purchase an application that executes the required steps of the process; however, in practice, these applications rarely execute all the steps of the process accurately or completely. Another approach is to use a combination of software and human intervention; however, this approach is more complex, making the documentation process difficult.

In response to these problems, companies have developed software that defines the full business process (as developed in the process design activity) in a computer language that a computer can directly execute. Process models can be run through execution engines that automate the processes directly from the model (e.g., calculating a repayment plan for a loan) or, when a step is too complex to automate, Business Process Modeling Notation (BPMN) provides front-end capability for human input. Compared to either of the previous approaches, directly executing a process definition can be more straightforward and therefore easier to improve. However, automating a process definition requires flexible and comprehensive infrastructure, which typically rules out implementing these systems in a legacy IT environment.
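As a minimal sketch of executing a process directly from its definition, the model below is plain data (an ordered list of steps) that a tiny engine runs. All names, the 50,000 review threshold, and the use of the standard annuity formula for the repayment step are assumptions for illustration, not the design of any particular BPMS.

```python
def monthly_payment(principal: float, annual_rate: float, months: int) -> float:
    """Standard annuity payment; falls back to equal instalments at 0% interest."""
    r = annual_rate / 12
    if r == 0:
        return principal / months
    return principal * r / (1 - (1 + r) ** -months)


def compute_plan(case: dict) -> dict:
    # Fully automated step.
    case["payment"] = round(
        monthly_payment(case["principal"], case["annual_rate"], case["months"]), 2
    )
    return case


def flag_for_review(case: dict) -> dict:
    # Step too complex to automate: only mark that human input is required.
    case["needs_human_review"] = case["principal"] > 50_000
    return case


# The process definition itself is data that the engine interprets.
PROCESS_MODEL = [compute_plan, flag_for_review]


def execute(model, case: dict) -> dict:
    for step in model:
        case = step(case)
    return case


result = execute(PROCESS_MODEL, {"principal": 10_000, "annual_rate": 0.12, "months": 12})
```

Changing the process then means editing `PROCESS_MODEL` rather than rewriting the engine, which is the property the paragraph above describes as easier to improve.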

Business rules have been used by systems to provide definitions for governing behavior, and a business rule engine can be used to drive process execution and resolution.
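How a rule engine might drive execution can be sketched as a list of named conditions evaluated against the facts of a case. The rule names, thresholds, and action labels are hypothetical; production rule engines add features such as rule priorities and conflict resolution that are omitted here.

```python
# Each rule: (name, condition over the facts, action to trigger).
RULES = [
    ("high_value", lambda facts: facts["amount"] > 10_000, "route_to_manager"),
    ("new_customer", lambda facts: facts["customer_age_days"] < 30, "verify_identity"),
]


def evaluate(rules, facts: dict) -> list[str]:
    """Return the actions of every rule whose condition holds, in rule order."""
    return [action for _name, condition, action in rules if condition(facts)]


actions = evaluate(RULES, {"amount": 20_000, "customer_age_days": 10})
```

Keeping the rules in a separate table lets business staff change thresholds without touching the process flow itself.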

Monitoring

Monitoring encompasses the tracking of individual processes, so that information on their state can be easily seen, and statistics on the performance of one or more processes can be provided. An example of this tracking is being able to determine the state of a customer order (e.g. order arrived, awaiting delivery, invoice paid) so that problems in its operation can be identified and corrected.

In addition, this information can be used to work with customers and suppliers to improve their connected processes. Examples are the generation of measures on how quickly a customer order is processed or how many orders were processed in the last month. These measures tend to fit into three categories: cycle time, defect rate and productivity.
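The three categories of measures can be computed from a simple record of completed orders. The records, field names, and the figure of 40 staff hours below are invented for illustration only.

```python
from datetime import datetime

# Hypothetical completed orders with start time, end time, and a defect flag.
orders = [
    {"id": 1, "start": datetime(2024, 1, 1, 9), "end": datetime(2024, 1, 1, 17), "defect": False},
    {"id": 2, "start": datetime(2024, 1, 2, 9), "end": datetime(2024, 1, 3, 9), "defect": True},
    {"id": 3, "start": datetime(2024, 1, 3, 9), "end": datetime(2024, 1, 3, 13), "defect": False},
    {"id": 4, "start": datetime(2024, 1, 4, 8), "end": datetime(2024, 1, 4, 20), "defect": False},
]


def cycle_time_hours(records) -> float:
    """Average elapsed time from start to end, in hours."""
    total_seconds = sum((r["end"] - r["start"]).total_seconds() for r in records)
    return total_seconds / len(records) / 3600


def defect_rate(records) -> float:
    """Share of orders flagged as defective."""
    return sum(r["defect"] for r in records) / len(records)


def productivity(records, staff_hours: float) -> float:
    """Orders completed per staff hour spent."""
    return len(records) / staff_hours


avg_cycle = cycle_time_hours(orders)
rate = defect_rate(orders)
per_hour = productivity(orders, staff_hours=40)
```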

The degree of monitoring depends on what information the business wants to evaluate and analyze and how the business wants it monitored: in real time, near real time, or ad hoc. Here, business activity monitoring (BAM) extends and expands the monitoring tools generally provided by BPMS.

Process mining is a collection of methods and tools related to process monitoring. The aim of process mining is to analyze event logs extracted through process monitoring and to compare them with an a priori process model. Process mining allows process analysts to detect discrepancies between the actual process execution and the a priori model as well as to analyze bottlenecks.
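The comparison of logged behaviour with an a priori model can be sketched as a simple conformance check. The model below reuses the customer-order states from the monitoring example; the `ALLOWED` table and `deviations` function are hypothetical names, and this is far simpler than the algorithms real process mining tools use.

```python
# A priori model: for each state, the set of states allowed to follow it.
ALLOWED = {
    "start": {"order arrived"},
    "order arrived": {"awaiting delivery"},
    "awaiting delivery": {"invoice paid"},
    "invoice paid": {"end"},
}


def deviations(trace: list[str]) -> list[tuple[str, str]]:
    """Return every observed transition that the model does not allow."""
    steps = ["start", *trace, "end"]
    return [
        (current, nxt)
        for current, nxt in zip(steps, steps[1:])
        if nxt not in ALLOWED.get(current, set())
    ]


conforming = deviations(["order arrived", "awaiting delivery", "invoice paid"])
skipped_step = deviations(["order arrived", "invoice paid"])
```

An empty result means the trace fits the model; each returned pair points to a discrepancy that an analyst can investigate.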

Predictive business process monitoring concerns the application of data mining, machine learning, and other forecasting techniques to predict what is going to happen with running instances of a business process, making it possible to forecast future cycle time, compliance issues, etc. Techniques for predictive business process monitoring include support vector machines, deep learning approaches, and random forests.

Optimization

Process optimization includes retrieving process performance information from the modeling or monitoring phase; identifying the potential or actual bottlenecks and the potential opportunities for cost savings or other improvements; and then applying those enhancements in the design of the process. Process mining tools are able to discover critical activities and bottlenecks, creating greater business value.

Re-engineering

When the process becomes too complex or inefficient, and optimization is not delivering the desired results, a company steering committee chaired by the president or CEO will usually recommend re-engineering the entire process cycle. Business process reengineering (BPR) has been used by organizations to attempt to achieve efficiency and productivity at work.

Suites

A market has developed for enterprise software leveraging the business process management concepts to organize and automate processes. The recent convergence of this software from distinct pieces such as business rules engines, business process modeling, business activity monitoring and human workflow has given birth to integrated business process management suites. Forrester Research, Inc. recognizes the BPM suite space through three different lenses:

- human-centric BPM

- integration-centric BPM (Enterprise Service Bus)

- document-centric BPM (Dynamic Case Management)

However, integration-centric and document-centric offerings have matured into separate, standalone markets.

Rapid application development (RAD) using no-code/low-code principles is becoming an increasingly prevalent feature of BPMS platforms. RAD enables businesses to deploy applications more quickly and more cost effectively, while also offering improved change and version management. Gartner notes that as businesses embrace these systems, their budgets rely less on the maintenance of existing systems and show more investment in growing and transforming them.

Practice

While the steps can be viewed as a cycle, economic or time constraints are likely to limit the process to only a few iterations. This is often the case when an organization uses the approach for short to medium term objectives rather than trying to transform the organizational culture. True iterations are only possible through the collaborative efforts of process participants. In a majority of organizations, complexity requires enabling technology (see below) to support the process participants in these daily process management challenges.

Many organizations start a BPM project or program with the objective of optimizing an area that has been identified as needing improvement.

Currently, the international standards for the task have limited BPM to application in the IT sector, and ISO/IEC 15944 covers the operational aspects of the business. However, some corporations with a culture of best practices do use standard operating procedures to regulate their operational processes. Other standards are currently being worked on to assist in BPM implementation (BPMN, enterprise architecture, Business Motivation Model).

Technology

BPM is now considered a critical component of operational intelligence (OI) solutions to deliver real-time, actionable information. This real-time information can be acted upon in a variety of ways: alerts can be sent or executive decisions can be made using real-time dashboards. OI solutions use real-time information to take automated action based on pre-defined rules so that security measures and/or exception management processes can be initiated. Because “the size and complexity of daily tasks often requires the use of technology to model efficiently,” and as technology resources became widely available for businesses to provide to their staff, “Many thought BPM as the bridge between Information Technology (IT) and Business.”

There are four critical components of a BPM Suite:

- Process engine – a robust platform for modeling and executing process-based applications, including business rules

- Business analytics – enable managers to identify business issues, trends, and opportunities with reports and dashboards and react accordingly

- Content management – provides a system for storing and securing electronic documents, images, and other files

- Collaboration tools – remove intra- and interdepartmental communication barriers through discussion forums, dynamic workspaces, and message boards

BPM also addresses many of the critical IT issues underpinning these business drivers, including:

- Managing end-to-end, customer-facing processes

- Consolidating data and increasing visibility into and access to associated data and information

- Increasing the flexibility and functionality of current infrastructure and data

- Integrating with existing systems and leveraging service oriented architecture (SOA)

- Establishing a common language for business-IT alignment

Validation of BPMS is another technical issue that vendors and users must be aware of, if regulatory compliance is mandatory. The validation task could be performed either by an authenticated third party or by the users themselves. Either way, validation documentation must be generated. The validation document usually can either be published officially or retained by users.

Cloud computing BPM

Cloud computing business process management is the use of BPM tools that are delivered as software services (SaaS) over a network. Cloud BPM business logic is deployed on an application server and the business data resides in cloud storage.

Market

According to Gartner, 20% of all the “shadow business processes” are supported by BPM cloud platforms. Gartner uses the term for all the hidden organizational processes that IT departments support as part of legacy business processes, such as Excel spreadsheets, rule-based routing of emails, and phone call routing. These can, of course, also be replaced by other technologies such as workflow and smart form software.

Benefits

The benefits of using cloud BPM services include removing the need and cost of maintaining specialized technical skill sets in-house and reducing distractions from an enterprise’s main focus. It offers controlled IT budgeting and enables geographical mobility.

Internet of things

The emerging Internet of things poses a significant challenge for controlling and managing the flow of information through large numbers of devices. To cope with this, a new direction known as BPM Everywhere shows promise as a way of blending traditional process techniques with additional capabilities to automate the handling of all the independent devices.

Information technology

Information technology (IT) is the use of computers to create, process, store, retrieve, and exchange all kinds of data and information. IT forms part of information and communications technology (ICT). An information technology system (IT system) is generally an information system, a communications system, or, more specifically speaking, a computer system — including all hardware, software, and peripheral equipment — operated by a limited group of IT users.

Although humans have been storing, retrieving, manipulating, and communicating information since the earliest writing systems were developed, the term information technology in its modern sense first appeared in a 1958 article published in the Harvard Business Review; authors Harold J. Leavitt and Thomas L. Whisler commented that “the new technology does not yet have a single established name. We shall call it information technology (IT).” Their definition consists of three categories: techniques for processing, the application of statistical and mathematical methods to decision-making, and the simulation of higher-order thinking through computer programs.

The term is commonly used as a synonym for computers and computer networks, but it also encompasses other information distribution technologies such as television and telephones. Several products or services within an economy are associated with information technology, including computer hardware, software, electronics, semiconductors, internet, telecom equipment, and e-commerce.

Based on the storage and processing technologies employed, it is possible to distinguish four distinct phases of IT development: pre-mechanical (3000 BC – 1450 AD), mechanical (1450–1840), electromechanical (1840–1940), and electronic (1940 to present).

Information technology is also a branch of computer science, which can be defined as the overall study of procedure, structure, and the processing of various types of data. As this field continues to evolve across the world, its priority and importance have also grown, which is why computer science-related courses are beginning to be introduced in K-12 education.

Data processing

Storage

Early electronic computers such as Colossus made use of punched tape, a long strip of paper on which data was represented by a series of holes, a technology now obsolete. Electronic data storage, which is used in modern computers, dates from World War II, when a form of delay-line memory was developed to remove the clutter from radar signals, the first practical application of which was the mercury delay line. The first random-access digital storage device was the Williams tube, which was based on a standard cathode ray tube. However, the information stored in both it and delay-line memory was volatile in that it had to be continuously refreshed, and thus was lost once power was removed. The earliest form of non-volatile computer storage was the magnetic drum, invented in 1932 and used in the Ferranti Mark 1, the world’s first commercially available general-purpose electronic computer.

IBM introduced the first hard disk drive in 1956, as a component of their 305 RAMAC computer system. Most digital data today is still stored magnetically on hard disks, or optically on media such as CD-ROMs. Until 2002 most information was stored on analog devices, but that year digital storage capacity exceeded analog for the first time. As of 2007, almost 94% of the data stored worldwide was held digitally: 52% on hard disks, 28% on optical devices, and 11% on digital magnetic tape. It has been estimated that the worldwide capacity to store information on electronic devices grew from less than 3 exabytes in 1986 to 295 exabytes in 2007, doubling roughly every 3 years.
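As a rough check on the “doubling roughly every 3 years” figure, assume steady exponential growth from a capacity $S_0$ in 1986 to $S_T$ in 2007, so that $T = 21$ years. Taking $S_0 = 3$ exabytes, the upper limit given above, and $S_T = 295$ exabytes, the implied doubling time is

$$
T_d = \frac{T \ln 2}{\ln(S_T / S_0)} = \frac{21 \times 0.693}{\ln(295/3)} \approx \frac{14.56}{4.59} \approx 3.2 \text{ years}.
$$

Because the 1986 capacity was below 3 exabytes, the doubling time under this assumption is somewhat shorter than 3.2 years, which is consistent with the stated figure of about three years.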

Databases

Database management systems (DBMS) emerged in the 1960s to address the problem of storing and retrieving large amounts of data accurately and quickly. An early such system was IBM’s Information Management System (IMS), which is still widely deployed more than 50 years later. IMS stores data hierarchically, but in the 1970s Ted Codd proposed an alternative relational storage model based on set theory and predicate logic and the familiar concepts of tables, rows, and columns. In 1981, the first commercially available relational database management system (RDBMS) was released by Oracle.

All DBMSs consist of components that allow the data they store to be accessed simultaneously by many users while maintaining its integrity. All databases have one point in common: the structure of the data they contain is defined and stored separately from the data itself, in a database schema.
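The separation between schema and data can be seen in a small sketch using Python's built-in `sqlite3` module. The `customer` table and its rows are invented for illustration; SQLite keeps table definitions in its `sqlite_master` catalog, apart from the rows themselves.

```python
import sqlite3

# An in-memory database, so the example leaves no file behind.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.executemany("INSERT INTO customer (name) VALUES (?)", [("Ada",), ("Grace",)])

# The schema is queried from the catalog table, not from the data.
schema_sql = conn.execute(
    "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = 'customer'"
).fetchone()[0]

# The data is queried from the table the schema describes.
rows = conn.execute("SELECT id, name FROM customer ORDER BY id").fetchall()
conn.close()
```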

In recent years, the extensible markup language (XML) has become a popular format for data representation. Although XML data can be stored in normal file systems, it is commonly held in relational databases to take advantage of their “robust implementation verified by years of both theoretical and practical effort.” As an evolution of the Standard Generalized Markup Language (SGML), XML’s text-based structure offers the advantage of being both machine and human-readable.
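The claim that XML is readable by both people and machines can be illustrated with Python's standard `xml.etree.ElementTree` parser. The `order` document below is a made-up example, not a real schema.

```python
import xml.etree.ElementTree as ET

# A small, hypothetical XML document that a person can also read directly.
doc = """<order id="42">
  <customer>Ada</customer>
  <item sku="A-1" qty="2"/>
</order>"""

root = ET.fromstring(doc)
order_id = root.get("id")                  # attribute on the root element
customer = root.findtext("customer")       # text content of a child element
quantity = int(root.find("item").get("qty"))
```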

Transmission

Data transmission has three aspects: transmission, propagation, and reception. It can be broadly categorized as broadcasting, in which information is transmitted unidirectionally downstream, or telecommunications, with bidirectional upstream and downstream channels.

XML has been increasingly employed as a means of data interchange since the early 2000s, particularly for machine-oriented interactions such as those involved in web-oriented protocols such as SOAP, describing “data-in-transit rather than… data-at-rest”.

Manipulation

Hilbert and Lopez identify the exponential pace of technological change (a kind of Moore’s law): machines’ application-specific capacity to compute information per capita roughly doubled every 14 months between 1986 and 2007; the per capita capacity of the world’s general-purpose computers doubled every 18 months during the same two decades; the global telecommunication capacity per capita doubled every 34 months; the world’s storage capacity per capita required roughly 40 months to double (every 3 years); and per capita broadcast information has doubled every 12.3 years.
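The doubling periods quoted above can be restated as compound annual growth rates, which makes them easier to compare. The sketch assumes steady exponential growth; the dictionary keys are shorthand labels introduced here, not terms from the cited study.

```python
def annual_growth(doubling_months: float) -> float:
    """Annual growth rate implied by a doubling period given in months."""
    return 2 ** (12 / doubling_months) - 1


# Doubling periods in months, taken from the paragraph above.
DOUBLING_MONTHS = {
    "application-specific computing": 14,
    "general-purpose computing": 18,
    "telecommunication": 34,
    "storage": 40,
    "broadcast": 12.3 * 12,
}

rates = {name: annual_growth(months) for name, months in DOUBLING_MONTHS.items()}

for name, rate in rates.items():
    print(f"{name}: {rate:.1%} per year")
```

A shorter doubling period always yields a higher annual rate, so the ordering of the categories is unchanged; only the scale becomes more intuitive.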

Massive amounts of data are stored worldwide every day, but unless it can be analyzed and presented effectively it essentially resides in what have been called data tombs: “data archives that are seldom visited”. To address that issue, the field of data mining — “the process of discovering interesting patterns and knowledge from large amounts of data” — emerged in the late 1980s.

Services

Email is the technology, together with the services it provides, for sending and receiving electronic messages (called “letters” or “electronic letters”) over a distributed (including global) computer network. In terms of the composition of elements and the principle of operation, electronic mail practically repeats the system of regular (paper) mail, borrowing both terms (mail, letter, envelope, attachment, box, delivery, and others) and characteristic features: ease of use, message transmission delays, sufficient reliability and, at the same time, no guarantee of delivery. The advantages of e-mail are: addresses of the form user_name@domain_name (for example, somebody@example.com) that are easy for a person to read and remember; the ability to transfer plain text, formatted text, and arbitrary files; independence of servers (in the general case, they address each other directly); sufficiently high reliability of message delivery; and ease of use by humans and programs.

The disadvantages of e-mail are: spam (mass advertising and viral mailings); the theoretical impossibility of guaranteeing delivery of a particular letter; possible delays in message delivery (up to several days); and limits on the size of one message and on the total size of messages in a mailbox (set individually for each user).

Search system

A search system is a software and hardware complex with a web interface that provides the ability to search for information on the Internet. The term “search engine” usually means the site that hosts the interface (front end) of the system. The software part of a search system is the search engine proper: a set of programs that provides the system’s functionality and is usually a trade secret of the company that develops it. Most search engines look for information on World Wide Web sites, but there are also systems that can look for files on FTP servers, items in online stores, and information on Usenet newsgroups. Improving search is one of the priorities of the modern Internet (see the Deep Web article about the main problems in the work of search engines).

Commercial effects

Companies in the information technology field are often discussed as a group as the “tech sector” or the “tech industry.” These labels can be misleading at times and should not be confused with “tech companies,” which are generally large-scale, for-profit corporations that sell consumer technology and software. It is also worth noting that, from a business perspective, information technology departments are a “cost center” the majority of the time. A cost center is a department or staff which incurs expenses, or “costs,” within a company rather than generating profits or revenue streams. Modern businesses rely heavily on technology for their day-to-day operations, so the expenses delegated to cover technology that facilitates business in a more efficient manner are usually seen as “just the cost of doing business.” IT departments are allocated funds by senior leadership and must attempt to achieve the desired deliverables while staying within that budget. Government and the private sector might have different funding mechanisms, but the principles are more or less the same. The constant pressure to do more with less is an often overlooked reason for the rapid growth of interest in automation and artificial intelligence, and it is opening the door for automation to take control of at least some minor operations in large companies.

Many companies now have IT departments for managing the computers, networks, and other technical areas of their businesses. Companies have also sought to integrate IT with business outcomes and decision-making through a BizOps, or business operations, department.

In a business context, the Information Technology Association of America has defined information technology as “the study, design, development, application, implementation, support, or management of computer-based information systems”. The responsibilities of those working in the field include network administration, software development and installation, and the planning and management of an organization’s technology life cycle, by which hardware and software are maintained, upgraded, and replaced.

Information services

Information services is a term somewhat loosely applied to a variety of IT-related services offered by commercial companies, as well as data brokers.

- U.S. Employment distribution of computer systems design and related services, 2011

- U.S. Employment in the computer systems and design related services industry, in thousands, 1990-2011

- U.S. Occupational growth and wages in computer systems design and related services, 2010-2020

- U.S. projected percent change in employment in selected occupations in computer systems design and related services, 2010-2020

- U.S. projected average annual percent change in output and employment in selected industries, 2010-2020

Ethics

The field of information ethics was established by mathematician Norbert Wiener in the 1940s. Some of the ethical issues associated with the use of information technology include:

- Breaches of copyright by those downloading files stored without the permission of the copyright holders

- Employers monitoring their employees’ emails and other Internet usage

- Unsolicited emails

- Hackers accessing online databases

- Web sites installing cookies or spyware to monitor a user’s online activities, which may be used by data brokers